He signed it; the world celebrated and embraced their innovation. Many hoped it would be another “flying cars” fad of a forgotten future’s past, but the phase never faded. There's a loud crashing sound from the front door as it splinters into shards. They promised it posed no threat. It's the middle of the night on a Tuesday; the door is obliterated, and a high-pitched mechanical churning sears through the metallic-laced air. They told us that we would be safe; safer, even. From the smoldering darkness emerges a familiar figure. They said it would be a better way of life. You stare into the soulless windows of artificial eyes: it's the reincarnation of this universe’s Terminator, but you’ve been waiting for it.

Waiting for ages, because that is about how far real robotics are from anything as autonomous as a Terminator. But that doesn’t mean we aren’t already within a generation or two of having robots that actually provide a benefit to humanity.

Some would argue that the robotic revolution is already happening. Eighth graders are winning awards for robots they’ve built; physics professors are being awarded grants for self-guided, autonomous robots; advancements in medicine have synced neurons to computer circuits, creating a “technopathic” communication network between brain and machine that is also capable of mobilizing bionic limbs; and Uber has created cars that drive themselves about as well as a teenager with a learner’s permit. But the bigger question remains: Where is all of this truly going?

While no one truly knows what the future of robots and artificial intelligence (A.I.) will look like, many worry that science fiction could turn into reality, as it has done in the past. Body scanners depicted in the first Total Recall movie in 1990 were installed in almost every major airport across the United States just two decades after the film's debut. What’s to say that the terrors of the Robotic Revolution won’t be the same?

“Science-fiction is the lie that tells the truth,” according to Jonathan Nolan (director of Westworld), in Do You Trust This Computer?, the documentary directed by Chris Paine. Civilians and experts alike share a deep-rooted fear of these robotic organisms, and despite this widespread anxiety, advancement continues at an exponential rate. Government-contracted military corporations have leveraged an unparalleled advancement in robotic technology, against the public's wishes.

One such example is the creation of Lethal Autonomous Weapons Systems (LAWS), or “killer robots,” as they are more affectionately known on the silver screen. It’s no secret that the global population largely disapproves of the use and development of these autonomous weapons, mainly out of fear that they would be misused by corrupt governments, hacked by radicals, or malfunction and kill innocent civilians. How can they tell a soldier from a child with a water gun?

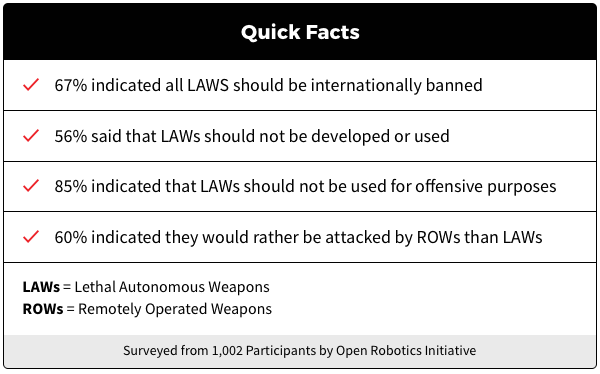

In 2015, the Open Robotics Initiative surveyed over 1,000 participants from 49 countries about LAWS. The goal of the survey was not to produce a statistic for the general population of any single country, but rather to capture a “snapshot” of the global perspective on the robotic revolution. The results indicate a global consensus of concern about these robotic advancements, even across cultural divides.

A little more than half of survey participants (56%) believed that LAWS should never be developed, and about two-thirds (67%) believed that LAWS should be banned from development or use. Further, a significant majority (71%) believed that the military should not have access to the technology at all and should instead maintain its use of remotely controlled weapons, such as drones.

A hefty majority (85%) also believed that LAWS should not be used in any offensive strategy or context. The results revealed what surveyors initially suspected: the public has a fear of robotics. But that also raises the question: Is that actually a bad thing?

Film producers have fueled the public's panicked perception of robotic advancement for decades; their movies are famous for it. Just look at I, Robot as an example. The parallel rise of A.I. is necessary to actually govern the actions of those robots. Although the concept is frightening at first, the experts who are keeping an eye on the situation have expressed minimal concern about the current state of affairs. Their longer-term concern, however, may lead some to wonder whether legitimate safety concerns exist that aren’t made public. And that raises the further question of disclosure.

What Should The Perception Be?

Since its launch in April 2013, the Campaign to Stop Killer Robots has worked to develop an international ban on the development of LAWS. Campaign members have discussed precautionary measures, but they have yet to come to a viable solution. To find one, the United Nations created a Group of Governmental Experts (GGE) on LAWS. Unfortunately, according to Bonnie Docherty, J.D., the Associate Director of Armed Conflict and Civil Protection at Harvard Law School, the GGE’s meeting “concluded with a recommendation [to] merely continue discussions next year.”

On August 21, 2017, the Group of Governmental Experts (GGE) had its first scheduled meeting with nearly 120 executives from companies developing A.I. and robotics. Together, they composed and then published a bona fide manifesto dubbed “An Open Letter to the United Nations Convention on Certain Conventional Weapons.” The letter begs the UN “to prevent an arms race in these weapons” and to actively “protect civilians from their misuse” by “[avoiding] the destabilizing effects of these technologies.” As of September 14, 2018, twenty more signatures had been acquired, including notable techies Elon Musk of SpaceX and Tesla, as well as the founders of Google’s A.I. company, DeepMind. According to these executives, it is their responsibility to raise the alarm about the development of LAWS, and it would seem that such alarms have been raised.

Current State of Robotic Affairs

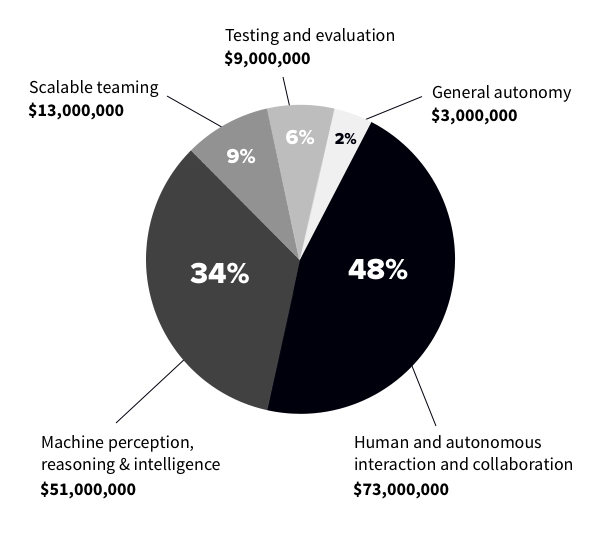

Dr. Vincent Boulanin, author of Mapping the development of autonomy in weapon systems and a researcher at the Stockholm International Peace Research Institute (SIPRI), states that the Department of Defense (DoD) spent $149 million on the development of A.I. and robotics during 2015; that budget has since increased nearly 12,000% to $18 billion through 2020. With so much invested in weaponry across the last decade, it might seem like enough to create a real-life Robocop or Iron Man, but the DoD has more pressing concerns. The first is how to improve human-system collaboration and interaction. Its second priority is advancing machine learning (A.I.), which is closely followed by the development of “swarm minded” autonomous technology (and how it could be advanced and then integrated with LAWS). Ranked last on the DoD's high-priority list is general autonomy, along with the testing and evaluation of LAWS. Much as with Oppenheimer and the development of the atomic bomb, there is a technological arms race to develop LAWS, even before safety protocols and standards have been built to govern them. That is where the global population’s fear may actually be rooted.

Is It Validated Paranoia?

Many of the robots portrayed in film or television tend to be malicious or otherwise sinister. The Terminator and Skynet, Marvel's villain Ultron, V.I.K.I. from I, Robot, and countless others have become the faces to fear as current technologies seem to be in flux with innovation and advancement.

However, not all movies present robotics or A.I. technology in such a negative light, and while the apocalyptic dystopias of deranged robots may sell out tickets on a Friday night, director Chris Paine would like to change that stigma and offer a different interpretation of a future reality.

During an interview, he explained why he decided to make the film Do You Trust This Computer?, a documentary about A.I. and how it could affect our future.

“We went into the project with...an open mind about it,” Paine recounts, and as the journey continued, “our prognosis turned out to be darker than I expected.” Due to the media’s portrayal of robots, there are multiple misunderstandings about A.I. and robotics in general, and Paine reflects on the difficulties he encountered trying to make the positive side of robotics known. In his book Our Robots, Ourselves: Robotics and the Myths of Autonomy, David Mindell, Ph.D., summarizes the three major myths surrounding robots and artificial intelligence.

First, he addresses how robots can and cannot replace people's jobs: nobody should be too concerned with the Bicentennial Man competing in the next Ironman competition, or with whether that would be fair. Mindell traces the linear progression of robotic technology as it moves toward autonomy, or "self-activation," a process that people engage in daily. And while we take it for granted as we go about our daily routines, such functions are incredibly hard to program into A.I.

Aside from the irrational misconceptions that people harbor regarding the advancements in robotics, there are other more relevant and legal criteria to consider.

Some visionaries have imagined a potential future where not all the doctors who see patients are human, but Mindell argues that “automation changes the type of human involvement required,” which does not eliminate the need for it. Mindell firmly believes that robotic innovation holds the potential to “transform” human interaction, describing robotic autonomy as “the highest expression of the technologies...that work most deeply, [and] fluidly with human beings.” That portrays these robotic “beings” as something almost symbiotic, rather than existing in a technical limbo, trapped somewhere between creation and life, between functionality and necessity.

